White Paper on the Technical Workflow of the Modeling a Racial Caste System (MRCS) Project

Corpus Creation

Getting the Scan

The legislative scope of this project is 1865 to 1968, a range selected to cover the post-Civil War legislative era and the inception and evolution of Jim Crow laws. To obtain scans, the team relied on HathiTrust, a digital repository of books and other materials contributed by academic institutions around the world, which gave the MRCS project access to many digitized volumes of Virginia laws from the Jim Crow era. However, not all volumes were available in HathiTrust or met the high-resolution standards needed for optimal OCR processing. To fill these gaps, the team scanned volumes in-house using high-resolution bulk scanning equipment and was able to compile a database of all Virginia laws from 1865 to 1968.

Processing and Image Conversion

The scans obtained from HathiTrust were typically downloaded in TIF format, a high-resolution format with large file sizes. Because of their size, these images were converted to JPEG for more efficient processing without compromising the resolution needed for OCR.
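The conversion step can be sketched with the Pillow imaging library. This is a minimal sketch under assumptions: the choice of Pillow, the function names jpeg_path_for and convert_tif_to_jpeg, and the quality=95 setting are illustrative and not taken from the MRCS code. Pillow is imported inside the conversion function so that the path helper works even where Pillow is not installed.

```python
from pathlib import Path


def jpeg_path_for(tif_path: Path, out_dir: Path) -> Path:
    """Map a .tif/.tiff scan to a .jpg path with the same stem in out_dir."""
    return out_dir / (tif_path.stem + ".jpg")


def convert_tif_to_jpeg(tif_path: Path, out_dir: Path, quality: int = 95) -> Path:
    """Convert one TIF scan to JPEG; the pixel dimensions are left unchanged."""
    from PIL import Image  # hypothetical dependency choice: requires Pillow

    out_dir.mkdir(parents=True, exist_ok=True)
    dst = jpeg_path_for(tif_path, out_dir)
    with Image.open(tif_path) as img:
        # JPEG cannot store transparency or palettes, so anything other than
        # grayscale ("L") or "RGB" is converted to RGB before saving.
        if img.mode not in ("L", "RGB"):
            img = img.convert("RGB")
        img.save(dst, "JPEG", quality=quality)
    return dst
```

JPEG is lossy, so a high quality setting is a reasonable default when the output feeds OCR; the right value for a given scanner is something to check empirically.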

Scanned images were organized in a specific directory structure so that the OCR processing pipeline could access them efficiently. The MRCS project used an ‘images’ directory containing one folder per volume, each representing an individual physical book on the shelf and named by year, such as '1865-66', or with a suffix for some session volumes, such as '1884es'. Within each volume’s directory, an originals folder holds all the initial scans without any OCR or additional processing; this is where all images to be processed are placed. Each image in the originals folder follows this naming convention:

VAactsofassembly_{volume}_{page number, padded with 5 digits}.{extension}

For example: VAactsofassembly_1865-66_00003.jpg
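The naming convention and directory layout above can be expressed as a pair of helpers. This is a hypothetical sketch: page_filename, parse_page_filename, page_path and PageId are illustrative names, not functions from the MRCS code package, and the regular expression assumes volume names contain no underscores.

```python
import re
from pathlib import Path
from typing import NamedTuple

# volume is everything between the prefix and the final "_#####" page field
NAME_RE = re.compile(r"^VAactsofassembly_(?P<volume>.+)_(?P<page>\d{5})\.(?P<ext>\w+)$")


class PageId(NamedTuple):
    volume: str
    page: int
    ext: str


def page_filename(volume: str, page: int, ext: str = "jpg") -> str:
    """Build a file name following the convention (page zero-padded to 5 digits)."""
    return f"VAactsofassembly_{volume}_{page:05d}.{ext}"


def parse_page_filename(name: str) -> PageId:
    """Recover volume, page number and extension from a conforming file name."""
    m = NAME_RE.match(name)
    if m is None:
        raise ValueError(f"not a conforming page file name: {name!r}")
    return PageId(m["volume"], int(m["page"]), m["ext"])


def page_path(images_dir: Path, volume: str, page: int, ext: str = "jpg") -> Path:
    """Location of a page image: images/<volume>/originals/<file name>."""
    return images_dir / volume / "originals" / page_filename(volume, page, ext)
```

Zero-padding keeps a plain lexicographic sort of file names in page order, which matters when a script sorts its list of paths before processing.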

Cropping Paratextual Information

To prepare the scans for OCR processing, it was crucial to ensure that only the main text of the laws was extracted, excluding paratextual information such as page numbers, headers, marginalia, footers, and watermarks. The goal was to achieve clean, text-focused images for accurate OCR processing.

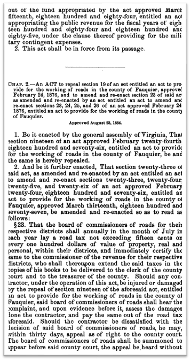

In the sample above, the left image shows an uncropped page with all its components intact, and the right image shows a page with the paratextual information cropped away, leaving only the relevant text.

Cropping paratextual information involved several steps. This process was implemented in Python, and the code is available on the MRCS GitHub page.

The Scripts

There are seven scripts in total in the MRCS_code_package on GitHub.

flow.py:

- Orchestrates the processing of volumes.

crop.py:

- Handles image processing tasks and crops images based on detected contours.

- Uses utility functions from crop_functions.py.

crop_functions.py:

- Provides utility functions for image processing.

status.py:

- Generates a status report on the processing of image volumes.

splitter.py:

- Splits text files of laws into sentences and organizes them into structured formats.

text_tools.py:

- Focuses on tools and utilities for text processing.

- Might be used for handling text extraction or manipulation related to the volume data.

ocr.py:

- Contains functions related to slower or more complex processing tasks.

- Includes the single() function, which processes one volume by calling into crop.py.
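The description of status.py suggests a report on how far each volume has progressed through the folders described earlier. The sketch below is hypothetical: volume_status and format_report are illustrative names, and it assumes the per-volume originals/, cropped/ and issues/ layout rather than reproducing the actual script.

```python
from pathlib import Path
from typing import Dict

SUBFOLDERS = ("originals", "cropped", "issues")  # assumed per-volume layout


def volume_status(images_dir: Path) -> Dict[str, Dict[str, int]]:
    """Count files in each volume's originals/, cropped/ and issues/ folders."""
    report: Dict[str, Dict[str, int]] = {}
    for volume_dir in sorted(p for p in images_dir.iterdir() if p.is_dir()):
        counts: Dict[str, int] = {}
        for sub in SUBFOLDERS:
            folder = volume_dir / sub
            # A missing folder simply means that stage has not produced output yet.
            counts[sub] = sum(1 for f in folder.iterdir() if f.is_file()) if folder.is_dir() else 0
        report[volume_dir.name] = counts
    return report


def format_report(report: Dict[str, Dict[str, int]]) -> str:
    """Render the counts as a fixed-width table, one row per volume."""
    lines = [f"{'volume':<12}" + "".join(f"{s:>10}" for s in SUBFOLDERS)]
    for volume, counts in report.items():
        lines.append(f"{volume:<12}" + "".join(f"{counts[s]:>10}" for s in SUBFOLDERS))
    return "\n".join(lines)
```

A volume with a non-zero issues count is a candidate for parameter tuning or manual cropping.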

The Cropping Process

The core functionality begins in flow.py, whose single() function processes and crops an individual volume. single() calls crop() from crop.py, which takes a volume identifier and a list of image paths as inputs, performs contour detection, and crops each image based on the detected contours. Each image is then saved to its assigned folder: issues, cropped, or originals. flow.py also contains ocr_all_cropped_volumes(), which applies the same process as single() to every volume in the images folder.

The crop.py script relies on functions defined in crop_functions.py to perform contour detection and image cropping. Once contour detection is complete, main_bbox() calculates the bounding box, the rectangle enclosing the main text block, which is used to isolate that text for OCR.

Below is a detailed explanation of the cropping process:

Initialization:

- The function starts by initializing an empty dictionary (imgs_dict) and a DataFrame (imgs_df) to store information about each processed image.

- The path_list containing paths to the scanned images is sorted for sequential processing.

- Loop through path_list:

- Image Reading: Each image in path_list is loaded with cv2.imread(path).

- Contour Extraction: get_contours(img, dil_iter) is called to identify contours within the image. Dilation, repeated dil_iter times, closes small gaps in the text blocks so that the full boundary of the text is easier to detect.

- Bounding Box Calculation: The main_bbox(img, c_df, x_buffer, y_buffer) function calculates the bounding box of the main text block, focusing only on the central text and excluding marginalia, headers, footers, and watermarks.

- Error Handling: If there is an issue finding the bounding box, the function prints an error message and skips to the next image.

- Initial Cropping: The image is initially cropped to the bounding box, isolating the central text block.

- Marginalia Check and Removal:

- The function checks for marginalia using check_for_marginalia(cropped). If marginalia are detected, it attempts to remove them using remove_child_marginalia(cropped, dil_iter), adjusting the bounding box to exclude these elements.

- Second Round of Cropping:

- After removing marginalia, the function further refines the cropping by applying a second round of adjustments with crop_round2(cropped, dil_iter). This step ensures that any remaining unwanted elements (like small headers or footers) are excluded, leaving only the central text block.

- Error Handling and Saving:

- If any errors occur during processing, the original image is saved to an “issues” directory for further inspection. Otherwise, the cropped image is saved to a “cropped” directory.

- The original image, whether processed successfully or not, is moved to an “originals” directory to keep the workspace organized.

The crop function automates the extraction of the main text block from scanned book pages, precisely removing paratextual information. This precision is achieved by carefully adjusting the parameters dil_iter, x_buffer, and y_buffer. Each parameter influences how well the central text is isolated from unwanted elements like marginalia, headers, and footers.

Parameters and Their Impact

The parameters dil_iter, x_buffer, and y_buffer determine the accuracy and effectiveness of the cropping process. Different settings affect the outcomes, allowing for more precise adjustments tailored to the specific characteristics of the scanned images. The default values for the MRCS project may not apply to all image types.

The following describes what each parameter controls and how changing it affects the output.

- dil_iter (Dilation Iterations = 20 by default):

- Purpose: dil_iter sets how many times dilation is applied, which expands the text regions before their contours (boundaries) are detected. This expansion closes small gaps within text blocks and connects broken parts of text that might otherwise produce incomplete or fragmented contours.

- Increasing dil_iter: The contours become more robust, potentially merging closely spaced text lines or characters. This can be helpful in noisy images where the text is fragmented or where the boundaries are not clearly defined. If increased too much, it might cause the merging of separate text blocks (like paragraphs) or even include unwanted elements like marginalia or headers into the main bounding box. This could lead to a less precise cropping.

- Decreasing dil_iter: The contours become less robust, potentially leading to more accurate boundary detection for tightly spaced text. This is particularly useful if the text is already well-separated from other elements like marginalia or if the scanned image is of high quality. Lower dilation might fail to connect all parts of a text block, especially if the text is faint, broken, or has small gaps. This could result in incomplete cropping or the exclusion of relevant text.

- x_buffer (Horizontal Buffer = 20 by default):

- Purpose: The x_buffer parameter adds a margin of space to the left and right sides of the detected bounding box around the text block. This ensures that the cropped image does not cut off any text that might be close to the edge of the bounding box.

- Increasing x_buffer: The cropped image will include more horizontal space around the text block. This can help capture any text that is close to the edges of the bounding box, preventing it from being cut off. If increased too much, it might include unnecessary white space or even unwanted elements like marginalia, which could reduce the precision of the cropping process.

- Decreasing x_buffer: The cropped image will have a tighter fit around the text block, reducing the amount of horizontal space included. If decreased too much, there’s a risk of cutting off text that is close to the edges, leading to incomplete or clipped text in the cropped image.

- y_buffer (Vertical Buffer = 10 by default):

- Purpose: Similar to x_buffer, the y_buffer parameter adds vertical space to the top and bottom of the detected bounding box. It ensures that the cropped image does not cut off any text that might be close to the top or bottom edges.

- Increasing y_buffer: The cropped image will include more vertical space around the text block, capturing any text that might be near the top or bottom edges of the bounding box. If increased too much, it might include additional elements like headers or footers, or simply add unnecessary white space, reducing the precision of the cropping process.

- Decreasing y_buffer: The cropped image will have a tighter vertical fit around the text block, reducing the amount of vertical space included. Similar to x_buffer, reducing y_buffer too much could result in cutting off text that is close to the top or bottom edges, leading to incomplete or clipped text.
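The effect of x_buffer and y_buffer reduces to simple arithmetic on the bounding box. The sketch below is hypothetical: apply_buffers is an illustrative name, and clamping the padded box to the image edges is an assumption about how out-of-range coordinates are handled. The defaults are the MRCS values described above.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels; x1 and y1 exclusive


def apply_buffers(box: Box, img_w: int, img_h: int, x_buffer: int = 20, y_buffer: int = 10) -> Box:
    """Pad a detected text bounding box and clamp it to the image edges."""
    x0, y0, x1, y1 = box
    return (
        max(x0 - x_buffer, 0),      # left edge moves left
        max(y0 - y_buffer, 0),      # top edge moves up
        min(x1 + x_buffer, img_w),  # right edge moves right
        min(y1 + y_buffer, img_h),  # bottom edge moves down
    )
```

For a box (100, 200, 900, 1400) on a 1000 x 1500 page, the defaults give (80, 190, 920, 1410). Raising x_buffer to 150 pushes both horizontal edges past the page, so they clamp to (0, 190, 1000, 1410), which is exactly the "too much buffer pulls in margins" case described above. The crop itself is then `img[y0:y1, x0:x1]`.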

Prepping for OCR (Optical Character Recognition)

After the cropping process, some images required manual adjustments because of errors or unusual layouts that the automated cropping scripts could not handle. Adjusting the cropping parameters for 5,000 images fixed 80% of the images with issues. Over 1,000 images had to be cropped manually in Photoshop, and about 100 had to be rescanned and reprocessed.

The MRCS team used Tesseract OCR for this project, which must be properly installed and configured for the OCR process to work. Tesseract is a widely used OCR engine with extensive documentation, available here: Tesseract Documentation.

To check whether Tesseract is properly installed, run “tesseract --version” in a terminal.

Running the OCR

To execute the OCR process, run the ocr_cropped_volume() function from ocr.py with the volume of cropped images as its argument. This function applies OCR to each image in the volume, converting the visual text into a searchable, editable format.
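A minimal sketch of per-volume OCR, assuming Tesseract is called through its command-line interface (`tesseract <image> stdout -l <lang>` prints the recognized text). The names tesseract_version, tesseract_cmd and ocr_volume are hypothetical, as is writing one .txt file per page; the project's ocr_cropped_volume() may use a Python wrapper instead.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List, Optional


def tesseract_version() -> Optional[str]:
    """First line of `tesseract --version`, or None if tesseract is not on PATH."""
    if shutil.which("tesseract") is None:
        return None
    result = subprocess.run(["tesseract", "--version"], capture_output=True, text=True, check=True)
    # Some Tesseract builds print the version to stderr rather than stdout.
    output = result.stdout or result.stderr
    return output.splitlines()[0] if output else None


def tesseract_cmd(image: Path, lang: str = "eng") -> List[str]:
    """Command that OCRs one image and writes plain text to stdout."""
    return ["tesseract", str(image), "stdout", "-l", lang]


def ocr_volume(cropped_dir: Path, out_dir: Path, lang: str = "eng") -> int:
    """OCR every .jpg in cropped_dir into a matching .txt file; return the page count."""
    out_dir.mkdir(parents=True, exist_ok=True)
    count = 0
    for image in sorted(cropped_dir.glob("*.jpg")):
        result = subprocess.run(tesseract_cmd(image, lang), capture_output=True, text=True, check=True)
        (out_dir / (image.stem + ".txt")).write_text(result.stdout, encoding="utf-8")
        count += 1
    return count
```

Keeping one text file per page preserves the link between OCR output and its source image, which makes it easier to trace classification errors back to OCR problems.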

Finding Laws

To identify laws within the processed text, the process_laws() function in flow.py takes a volume as its argument and uses pattern matching to extract individual laws from the OCR output. The patterns are tailored to changes in how Virginia laws were formatted over time, such as the restructuring of the organizational framework implemented in 1950 (FAQs Code of Virginia: Virginia Code Commission).

- For Volumes Pre-1950: the function searches for laws using the pattern "Chap. ##." This pattern captures the chapter numbers typically used in earlier publications.

- For Volumes Post-1950: the function searches for laws using the updated pattern where laws are marked by "CHAPTER ##." This pattern adjustment accurately captures laws in more recent texts.

The process_laws() function is run across all volumes in the corpus, applying the appropriate pattern matching based on the year of publication.
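The year-dependent pattern matching can be sketched with regular expressions. The exact patterns, the names volume_year and split_laws, and treating volumes from 1950 onward as new-style (the paper does not say which pattern 1950 itself uses) are assumptions for illustration, not the code in process_laws().

```python
from __future__ import annotations

import re

# Assumed header patterns. Real OCR output may need looser matching
# (e.g. "Chap ." or a missing period), so treat these as starting points.
OLD_STYLE = re.compile(r"(?=\bChap\. \d+\.)")   # volumes before the cutoff year
NEW_STYLE = re.compile(r"(?=\bCHAPTER \d+\.)")  # volumes from the cutoff year on


def volume_year(volume: str) -> int:
    """Read the leading four-digit year from names like '1865-66' or '1884es'."""
    match = re.match(r"\d{4}", volume)
    if match is None:
        raise ValueError(f"cannot read a year from volume {volume!r}")
    return int(match.group(0))


def split_laws(text: str, volume: str, cutoff: int = 1950) -> list[str]:
    """Split one volume's OCR text into chunks that each start at a chapter header."""
    pattern = NEW_STYLE if volume_year(volume) >= cutoff else OLD_STYLE
    # Splitting on a zero-width lookahead keeps each header attached to its law.
    chunks = pattern.split(text)
    # The first chunk is any front matter before the first header; drop it.
    return [chunk.strip() for chunk in chunks if pattern.match(chunk)]
```

Splitting on a lookahead rather than on the header text itself means no header is lost, so the chapter number can still be read from the start of each chunk.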

Preparing the Corpus

After identifying and extracting the laws, the next step is to compile them into a cohesive aggregate corpus. The build_corpus() function consolidates the individual law entries into a single database which can be used for further analysis and study. This corpus is the foundation of the MRCS project and enables systematic examination of the identified laws.
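Consolidating the extracted laws can be sketched as flattening per-volume lists into one table. This is a hypothetical illustration of what build_corpus() does: build_corpus_rows, write_corpus_csv, the volume/chapter/text columns, and the CSV output format are assumptions, not the project's schema.

```python
import csv
import re
from typing import Dict, Iterable, List, TextIO

CHAPTER_RE = re.compile(r"^(?:Chap\.|CHAPTER)\s+(\d+)\.")  # assumed header forms


def build_corpus_rows(laws_by_volume: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Flatten {volume: [law text, ...]} into one row per law with its chapter number."""
    rows = []
    for volume in sorted(laws_by_volume):
        for law in laws_by_volume[volume]:
            m = CHAPTER_RE.match(law)
            # An empty chapter field flags a law whose header was not recognized.
            rows.append({"volume": volume, "chapter": m.group(1) if m else "", "text": law})
    return rows


def write_corpus_csv(rows: Iterable[Dict[str, str]], fh: TextIO) -> None:
    """Write the corpus as CSV with a header row."""
    writer = csv.DictWriter(fh, fieldnames=["volume", "chapter", "text"])
    writer.writeheader()
    writer.writerows(rows)
```

Keeping the volume and chapter alongside each law lets later sentence-level results be traced back to a specific act.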

Analysis

The Data

Moving from chapter-level to sentence-level precision improved the team's ability to identify implicit biases in legal texts. The final corpus included over 730,000 sentences, allowing for detailed examinations of both explicit and covert legislative language.
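Sentence segmentation of legal text has to avoid splitting on abbreviations such as "Sec." and on section numbers. The following is a minimal rule-based sketch in the spirit of splitter.py, not its actual code: split_sentences, the abbreviation list, and the rule that a period after a bare number or single initial does not end a sentence are all assumptions.

```python
import re
from typing import List

# Abbreviations that end in a period but do not end a sentence (assumed list;
# extend it after inspecting the OCR output).
ABBREVIATIONS = {"chap.", "sec.", "art.", "cl.", "no.", "co.", "vol.", "viz."}
SKIP_TOKEN = re.compile(r"^(\d+|[a-z])\.$")  # bare numbers ("12.") or initials ("r.")
BOUNDARY = re.compile(r"[.!?](?=\s+[A-Z])")  # terminal punctuation before a capital


def split_sentences(text: str) -> List[str]:
    text = re.sub(r"\s+", " ", text).strip()  # OCR line breaks -> single spaces
    sentences, start = [], 0
    for m in BOUNDARY.finditer(text):
        end = m.end()
        last_word = text[start:end].rsplit(" ", 1)[-1].lower()
        if last_word in ABBREVIATIONS or SKIP_TOKEN.match(last_word):
            continue  # punctuation belongs to an abbreviation or label
        sentences.append(text[start:end].strip())
        start = end
    tail = text[start:].strip()
    if tail:
        sentences.append(tail)
    return sentences
```

The bare-number rule keeps a label like "Sec. 2." attached to the sentence it introduces, at the cost of not splitting after a sentence that happens to end in a number followed by a capitalized word.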

Training Set Development

Creating a Virginia-specific training set was a critical step in preparing for supervised classification. The team identified 1,383 sentences as training examples which were then categorized into three groups:

- Jim Crow Laws (1): Sentences with clear discriminatory intent or language.

- Non-Jim Crow Laws (0): Neutral or unrelated sentences.

- Uncertain (2): Sentences flagged for further review and eventual reclassification into one of the above categories.

Key metadata fields included the law’s volume, chapter, reviewer notes, and whether it contained overt (explicit and clearly stated) or covert (implicit and subtle) discriminatory mechanisms. Randomized sampling from the corpus ensured diversity in the training data.
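The label scheme and sampling step can be sketched with a small record type. TrainingExample, sample_for_review and trainable are hypothetical names, and the field names only mirror the metadata listed above; seeded uniform sampling without replacement is an assumed stand-in for the team's randomized sampling.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

JIM_CROW, NON_JIM_CROW, UNCERTAIN = 1, 0, 2  # label codes from the training set


@dataclass
class TrainingExample:
    volume: str
    chapter: str
    sentence: str
    label: int                       # 0, 1 or 2
    mechanism: Optional[str] = None  # "overt" or "covert" for Jim Crow sentences
    notes: str = ""                  # reviewer notes


def sample_for_review(sentences: List[str], k: int, seed: int = 0) -> List[str]:
    """Draw k sentences uniformly at random, without replacement, for labeling."""
    return random.Random(seed).sample(sentences, k)


def trainable(examples: List[TrainingExample]) -> List[TrainingExample]:
    """Keep resolved examples; 'uncertain' (2) rows wait for reclassification."""
    return [ex for ex in examples if ex.label in (JIM_CROW, NON_JIM_CROW)]
```

Fixing the seed makes the sample reproducible, so another reviewer can regenerate exactly the same set of sentences.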

Supervised Classification

The analysis used machine learning models to classify sentences into Jim Crow and Non-Jim Crow categories. The team tested multiple algorithms, including:

- Random Forest: This model builds multiple decision trees and aggregates their predictions. Key parameters include:

- N_estimators: Number of trees in the forest, balancing accuracy and computational cost.

- Max_depth: Controls tree depth, balancing detail capture and complexity.

- Class_weight: Adjusts class balance, useful for imbalanced datasets.

- Stochastic Gradient Descent Classifier (SGD): A fast, efficient model suited for large datasets. Key parameters include:

- Loss: Specifies the loss function, such as "log_loss" for logistic regression (named "log" in scikit-learn versions before 1.1).

- Penalty: Regularization to prevent overfitting (e.g., l2 or elasticnet).

- Learning_rate: Sets the step-size schedule for each update; smaller steps converge more slowly but more stably.

- Early_stopping: Prevents overfitting by halting training when the score on a held-out validation set stops improving.

- Alpha: Regularization strength, controlling overfitting risk.

- Multinomial Naive Bayes: A simple, fast model effective for text classification based on word frequency. Key parameters include:

- Alpha: Smooths probabilities, managing infrequent words effectively.

- XGBoost (Extreme Gradient Boosting): A powerful, configurable model building trees iteratively. Key parameters include:

- Learning_rate: Step size for boosting iterations.

- Max_depth: Depth of trees for capturing patterns.

- Min_child_weight: Minimum sum of instance weights (hessian) required in a child node for a split to be made.

- Gamma: Minimum loss reduction required to make a further split.

- Colsample_bytree: Fraction of features used per tree.

- Scale_pos_weight: Scales the weight of positive examples to balance imbalanced classes.

- Tree_method: Defines tree-building algorithms (e.g., "hist" for efficiency).

- DistilBERT: A distilled version of BERT that is smaller and faster to train while retaining most of its accuracy. Its transformer-based architecture captures contextual nuances. Key parameters include:

- Batch_size: Number of samples per update; smaller batches use less memory.

- Num_epochs: Number of dataset iterations during training, balancing learning and overfitting risk.

- Init_lr: Initial learning rate for gradual model updates.

- Num_warmup_steps: Gradual increase of learning rate in initial steps for stability.

After testing, XGBoost emerged as the most effective model, achieving a 93% accuracy rate on Virginia data. The team’s preprocessing pipeline, which included tokenization, stopword removal, and TF-IDF transformation, played a significant role in improving model performance.
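The tokenization, stopword removal and TF-IDF steps can be sketched in plain Python to make the weighting explicit. In practice a library vectorizer such as scikit-learn's TfidfVectorizer would normally be used; this sketch reproduces that vectorizer's default weighting (raw term counts, smoothed idf = ln((1 + n) / (1 + df)) + 1, L2 normalization) but not its tokenizer, and the stopword list and function names are illustrative assumptions.

```python
import math
import re
from collections import Counter
from typing import Dict, List

STOPWORDS = {"the", "of", "and", "to", "a", "in", "or", "be", "shall", "by", "for", "any"}  # illustrative


def tokenize(text: str) -> List[str]:
    """Lowercase, keep alphabetic runs, drop stopwords."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def tfidf(docs: List[str]) -> List[Dict[str, float]]:
    """Sparse TF-IDF vectors: raw counts x smoothed idf, then L2-normalized."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log((1 + n) / (1 + df_t)) + 1 for t, df_t in df.items()}
    vectors = []
    for toks in tokenized:
        weights = {t: c * idf[t] for t, c in Counter(toks).items()}
        norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0  # empty doc guard
        vectors.append({t: w / norm for t, w in weights.items()})
    return vectors
```

Terms that appear in every sentence get the minimum idf of 1, so distinctive words such as "white" or "colored" carry more weight than common legal vocabulary; these vectors are the kind of features a classifier such as XGBoost is trained on.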

The code can be found on the MRCS GitHub and a more detailed explanation of this process can be found in the MRCS Technical Document found on the MRCS website.

Observations from Model Outputs

- Temporal Trends:

- The frequency of explicitly discriminatory language peaked in the late 19th and early 20th centuries, declining after mid-century civil rights legislation.

- Covert discriminatory mechanisms, such as the use of terms like “qualified voter,” became more prevalent over time.

- Thematic Patterns:

- Discriminatory language frequently appeared in laws related to education, housing, voting rights, and labor.

- Confederate-related terminology also emerged as a distinct category.

- Challenges:

- OCR inconsistencies and ambiguous legal language occasionally hindered classification.

- Implicit biases required more sophisticated models to interpret subtle discriminatory cues.

Conclusion

The team provided a framework for analyzing the impact of Jim Crow laws in Virginia by developing a high-quality corpus and training machine learning models. This analysis shows how these laws changed over time and highlights their patterns, offering a better understanding of systemic racism in legislative history. Similar analyses can be done in other states or legal contexts using the customizable pipeline and modular workflow built by the MRCS team. Virginia's curated corpus serves as a valuable resource for scholars, policymakers, and advocates. By preserving the legacy of past injustices, the MRCS project lays the groundwork for future research and informed decision-making in the fight against systemic inequality.